Prologue

The blockchain space is full of nonsense precisely because it is full of promise. It attracts top minds who constantly put out revolutionary ideas in various domains: robust P2P systems, financial primitives, zero-knowledge proof systems, coordination and distributed governance mechanisms, and many more. This intrigues the curious and adventurous types from the general public who typically have grievances from meatspace and are yearning for a new paradigm -and maybe hoping to get hilariously rich along the way. This in turn attracts opportunists who put together "narratives" for changing the world for the better using this technology -but only after they take your money of course. There is so much potential that it is fairly easy to create these narratives by scrambling together a word salad that only superficially relates to genuine R&D work.

This firehose of content, both technical and non-technical, both malicious and benign, is produced by a diverse portfolio of participants: researchers, developers, traders, venture capitalists, thot leaders, hackers, and scammers. And they are all engaged in a massively multi-player multi-level game that is too complex to play, let alone analyze and win.

Developers want to win by building on the right platform, choosing the right stack, and targeting the right niche. System architects want to win by building durable stacks and choosing the right abstractions. Researchers want to win by focusing on the right (sub)fields. Observers want to win by making money from all this activity, whether by finding gold or selling shovels.

The casual observer tries to develop a mental model to guide her through this, often reverting to a "safe middle" ground. We all like to think we're reasonable people, "there is truth to all sides". But the middle in crypto is always shifting, and it is often the wrong place to be.

The Birth of Alt

The bombshell that is the Nakamoto consensus is a paradigm shift in human coordination. It demonstrated the possibility of eliminating intermediaries and (re)defining the essence of virtual scarcity and the complex ways it can be composed and exchanged. The imagination it ignited quickly outgrew the specific implementation that is Bitcoin, whose limited and badly designed virtual machine (from a software engineering PoV) could not facilitate what a lot of dreamers wanted to build.

In those early years, the "multichain" was basically made up of "Bitcoin but with { different mining algo | dedicated marketing department | privacy | governance}". With the exception of Zcash, which still has a vibrant ZK R&D community, the rest are almost all now zombie chains in terms of R&D activity or adoption.

Just like Bitcoin, the VM in “coin chains” is not amenable to compiling higher-level code into it, and so to this day there are basically zero dApps on any of these coin networks. The case against app-specific (i.e. send-receive coin) blockchains:

There is more to money than send-receive. Money as a product will flourish in an environment that facilitates DeFi, "money wants to work".

The mental overhead from the volatility of these coins means that, at best, they will only ever be useful as a commodity; the popularity of stablecoins has more than proven this.

Even in coin chains that have genuine R&D, such as Zcash, it is doubtful they can compete with the fully programmable and stateful Aztec for example.

Doge will probably survive as an NFT-like asset that only gets more endearing with time.

Ethereum Killers: a Short Story

Enter Ethereum. Novel applications started getting built even before Ethereum launched, using alpha versions of compilers that take Javascript-like code in a language they called Solidity. The ease with which intentions can be turned into code was refreshing to those who may have attempted and failed at bending the horrendous Bitcoin Script to their will. The ERC20 standard contract fired up the first viral use case on Ethereum as a fund-raising platform.

Around this time, in 2015-2016, there were two buzzing narratives besides Ethereum's "world computer". One was "Blockchain not Bitcoin" that some early enterprise experimenters were advocating for. It didn't take long for this narrative to be completely choked by the first PMF that Ethereum had as a crowd-funding mechanism, i.e. the subsequent ICO boom.

The second narrative was "Ethereum is a sh!tcoin, Bitcoin sidechains are the future". Bitcoin sidechains are potentially the biggest nothing-burger in the history of blockchain. The fundamental issue is that the limited programmability of Bitcoin's VM meant the near impossibility of coding up trust-minimized on- and off-ramp bridges between mainnet Bitcoin and its sidechains. Ethereum sidechains would receive a similarly tepid reception. This is because sidechains neither provide the trust-minimization of other budding layer-2 solutions, nor embrace their truth as independent layer-1 blockchains themselves.

The "Ethereum but scalable" narrative escalated in 2017-2019-ish. The typical script of many whitepapers and blogs/podcasts in 2017-2018 goes something like this:

"Ethereum is good, but did you see how Crypto Kitties clogged the network, lol? Check out my new consensus algo and buy my token on your way out".

To researchers and developers, this was hilarious because the consensus algorithm is not the bottleneck. How a distributed network comes to a consensus is not the issue. The amount of resources a node has to spend is the issue. The higher the requirement to follow and verify a chain, the fewer nodes can join, and the weaker the security and censorship resistance guarantees overall.

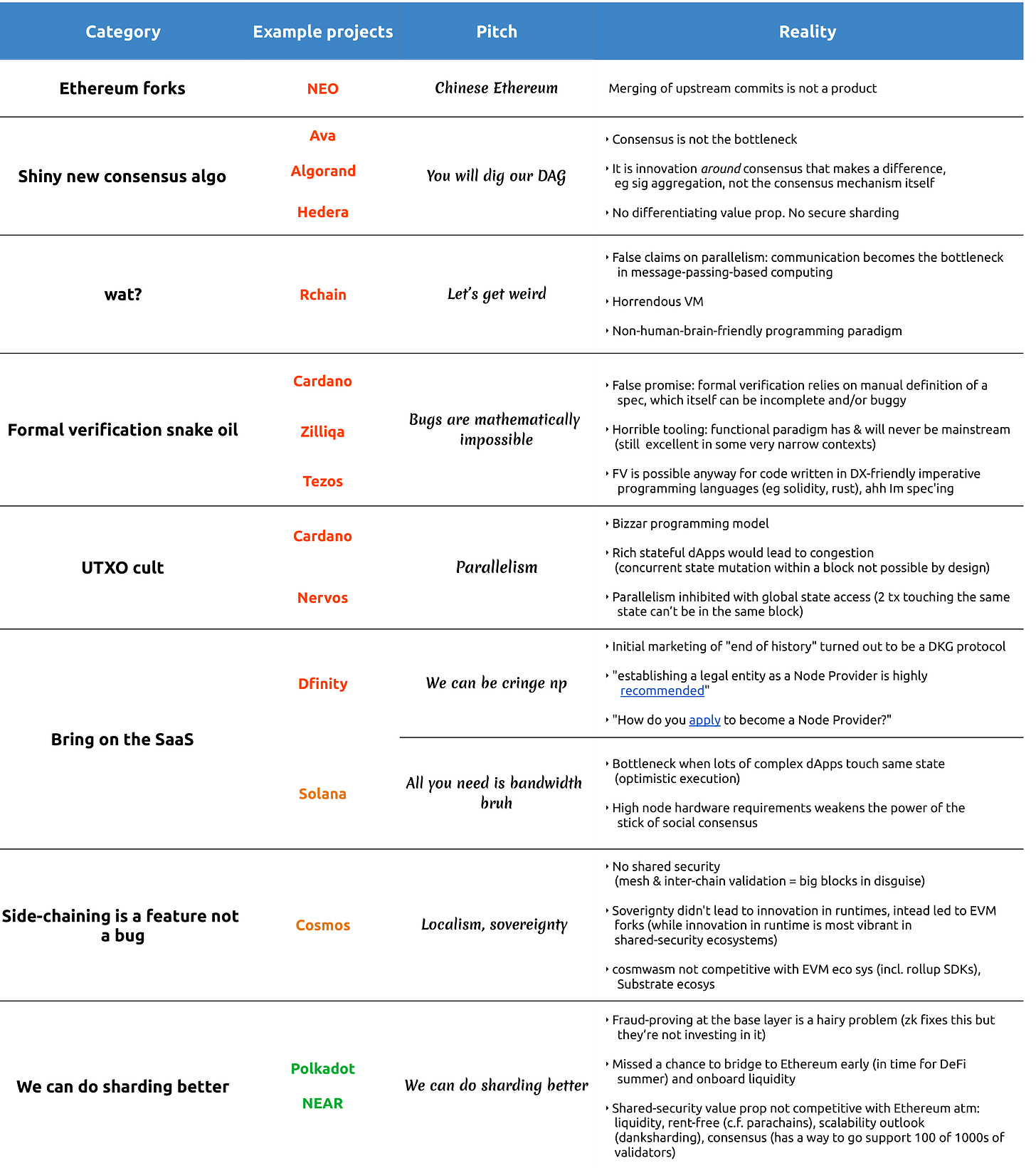

But the ICO fever was sweeping, and the only tune that mattered was "cha-ching!" on repeat. The alt-coin wave was waning and the new alt-L1 wave was heating up. There were different flavors to the wave of Ethereum killers. The table below provides some examples:

There was one project that pre-dated the Ethereum killer wave, Tezos, which was and still is a hobbyist project for functional programming fans. Its tooling is not up to any serious software engineering standards and has never had, and will never have, any developer adoption unless its VM is gutted out and replaced. I talked about this before and got some people triggered. Only in the blockchain space can you get away with stuff like this.

The Modularity Industrial Complex

Early layer-2 R&D

Scalability has been a central concern in the Ethereum ecosystem and (less productively) the ecosystem at large since the early days. The basic idea behind building what's now called optimistic rollups, which Vitalik called "shadow chains", has been out there since 2014.

Around the same time, two students presented a complementary idea called "Arbitrum" in a dimly-lit university basement. It is an interactive challenge game between an off-chain operator and a whistle-blower to ascertain (on L1) whether the off-chain computation contained fraud. This nifty trick allows the verification of a fraud claim pertaining to a previously, optimistically posted batch of transactions without having to re-execute the entire batch. The issue of replaying off-chain execution on L1 will later plague many variants of the Plasma construction (more on this below).

So the basic ingredients for a viable scalability design pattern were right there for anyone to build since the early days.

But the scalability R&D was largely sidetracked into other avenues until at least 2018, as the attention of most devs and researchers was being consumed by:

Base-layer sharding using fraud proofs which, to this day, have never been spec'd out concretely let alone implemented in the context of any L1. It turned out to be an extremely hairy problem in situations where the network gets partitioned. Later, Ethereum would ditch this whole idea, throwing the ball to independently-implemented shards aka rollups. Polkadot and NEAR continued to aim for -but never fully succeed at- L1 sharding.

State channels, which necessitated deploying "watchtowers" in case one of the two parties loses connection and the other tries to submit a stale state of the channel. But then who watches the watchers? Rollup fixes this, but researchers were not done pussy-footing about. They needed to get some plasma out of their system first.

Plasma is an almost-rollup design pattern. There are many variants, but the main deal breaker is that challenging a malicious plasma operator required posting too much data too many times. Things that can go wrong: operator withholding data, L1 congestion making the posting of massive plasma state snapshots expensive, malicious users DoS'ing the operator with frivolous fraud flags ... it's a mess.

Meanwhile, many projects threw in the towel and joined a cult worshipping a golden calf named sidechain. You can refer to this article for more on sidechains, state channels, and plasma.

Rollup enters the chat

It was becoming increasingly clear that the fundamental problem at hand is data availability. If the necessary data needed to independently reconstruct the L2 state is guaranteed to be accessible, coding up law enforcement on L1 to compel an L2 to a single truth is straightforward conceptually, even if the implementation is a herculean task.

The term "rollup" captured the new but in fact old paradigm. The term was invented by BarryWhiteHat when he casually pushed a repo he called "roll_up". In fact, he just invented ZK Rollups (ZKRUs). Soon after, Vitalik refined the idea and Brecht implemented the first dApp-specific ZKRU (Loopring DEX).

Once a ZKRU batch is submitted and accepted by L1, it's final (to the extent that the L1 block it was included in is final). The execution of the L2 EVM emulator is validity-proven, and the proof (a zkSNARK) is verified on L1 with each state update.

Then, things escalated quickly as new proof systems started appearing, paving the way for general-purpose computing in ZK. The speed with which practical general-compute in ZK became a reality surprised everyone. 2019-today represents a golden era of ZK R&D. More on ZK in the last section.

The qualifiers “optimistic” and “ZK” are now used to distinguish the two instantiations of this “rollup” paradigm: off-chain-compute on-chain-data-and-validation.

Arbitrum continued quietly working on their interactive fraud prover, while Optimism argued vehemently for a non-interactive fraud prover ("OVM") where the L1 contract would, in a single call, verify the validity of a fraud report, gut out the bad state, and punish the offending L2 operator. This approach will prove complex:

Discrepancies between opcodes on L1 and L2 complicate both compatibility with the EVM and fraud proving arbitration (OVM modified the Solidity compiler).

Optimism will later ditch this approach for interactive fault proofs, which are architecturally identical to Arbitrum's (not that they would give Arbitrum any credit).

Interactive fault proofs

An interactive fault arbitration dance leads to a single moment-of-truth validation of a single transition from one opcode to another, on L1. What opcode and where? The opcode is in a past execution of the EVM-emulating core of the L2 node. The latter does a lot of stuff around this core emulator. Take the hairy problem of determining how much it will cost to commit the next batch of data to L1. The L2 node can probe the L1 node (which obviously it’s running too, to detect deposits for example). But what if the L1 fees spike immediately before the L2 operator broadcasts its next batch? So the L2 node has to do fancy algorithmic stuff (maybe some stochastic process simulation) to predict and fairly amortize the L1 data cost. Note that L2 has gas costs of its own on top of that. This is because a rollup may be congested independently of L1. EVM-compatible rollups simply use the same EIP-1559 mechanism as L1. However, the overall cost is dominated by L1’s data cost, and congestion on an L2 clearly contributes to a higher gas cost of L1 blocks as well.
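To make the EIP-1559 reference concrete, here is a minimal sketch of the base-fee update rule that an EVM-compatible rollup could reuse for its own L2 congestion pricing. The constants are Ethereum mainnet's; whether a given rollup uses the same values is an assumption, and this says nothing about how the L1 data cost is predicted or amortized.

```python
# Sketch of the EIP-1559 base-fee update rule, using integer arithmetic.
# Constants are Ethereum mainnet's; an L2 may choose different ones (assumption).
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # base fee moves by at most 1/8 per block
ELASTICITY_MULTIPLIER = 2            # gas target is half of the gas limit


def next_base_fee(base_fee: int, gas_used: int, gas_limit: int) -> int:
    """Return the base fee of the next block given the parent block's usage."""
    gas_target = gas_limit // ELASTICITY_MULTIPLIER
    if gas_used == gas_target:
        return base_fee
    if gas_used > gas_target:
        # Block was fuller than the target: raise the fee (by at least 1 wei).
        delta = (base_fee * (gas_used - gas_target)
                 // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR)
        return base_fee + max(delta, 1)
    # Block was emptier than the target: lower the fee.
    delta = (base_fee * (gas_target - gas_used)
             // gas_target // BASE_FEE_MAX_CHANGE_DENOMINATOR)
    return base_fee - delta
```

A completely full block raises the base fee by 12.5% and an empty one lowers it by 12.5%, which is why a congested rollup can get expensive even when L1 is quiet.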

In the latest Arbitrum version they compile Geth to a modified WASM and so the dispute is eventually over an opcode of this WASM-ish binary. Some confuse the state transition within the EVM-emulating L2 node with the state transition of the rollup overall. It's subtle, but this is wrong. The rollup L1 contract takes the output of the L2 EVM emulator and advances the tip of the rollup on L1. That is where the ultimate source of truth is. If someone fires up an L2 node, they sync against the L1 state -they don't need to communicate with L2 nodes at all. If a million trees fall on L2 and L1 didn’t hear it, it didn’t happen.

The foreplay dance between sequencer/proposer and whistleblower in optimistic rollups is intended to corner both into that one crucial opcode. Briefly and handwavingly:

1. The whistleblower raises the flag on L1: "a fault has been committed in this batch".

2. L1 contract: "is the fault in the first (H1) or the second (H2) half of the batch?"

3. Whistleblower: "H1" (for example)

4. L1 contract: "dis true, sequencer"?

5. Sequencer: "would you believe me if I said no?"

6. L1 contract: "is the fault in the first or the second half of H1, whistleblower"?

7. Whistleblower: "H2"

8. If size(H2) > 2, H = H2. GOTO 2

9. Re-execute Hx and validate the fault claim

This interactivity is between a whistleblower, a sequencer, and the fraud-proving contract on L1. An "interaction" is simply an L1 transaction.

The size of the batch keeps getting bisected till Hx contains only two instructions, i.e. a single transition (x ~= log2(faulty_batch_size)). At this point the L1 contract re-executes that single transition and verifies whether it was indeed faulty. If it is, it awards half of the sequencer's bond to the whistleblower, and burns the other half. If it isn't, the whistleblower loses the (much smaller but still stinging) bond she put up at the very start. The reason some of the award gets burned is to make self-reporting by the sequencer non-free.
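The bisection game can be sketched in a few lines. This is a toy model, not Arbitrum's or Optimism's actual protocol: the "VM" is a made-up one-line `step` function, states are plain integers rather than hashed commitments, bonds and timeouts are omitted, and every name here is hypothetical.

```python
from typing import List, Tuple

MODULUS = 1_000_003  # arbitrary modulus for the toy VM


def step(state: int) -> int:
    """Toy deterministic VM: executing one 'opcode' maps a state to the next."""
    return (state * 3 + 1) % MODULUS


def run(start: int, n_steps: int) -> List[int]:
    """Honest execution trace: n_steps + 1 states, beginning with `start`."""
    trace = [start]
    for _ in range(n_steps):
        trace.append(step(trace[-1]))
    return trace


def corrupt_from(honest: List[int], i: int) -> List[int]:
    """A faulty sequencer's trace: honest up to state i - 1, wrong afterwards."""
    claimed = list(honest[:i])
    claimed.append((honest[i] + 1) % MODULUS)
    while len(claimed) < len(honest):
        claimed.append(step(claimed[-1]))
    return claimed


def bisect_dispute(claimed: List[int], honest: List[int]) -> Tuple[int, int]:
    """Narrow the dispute to one step. Returns (step index, rounds played)."""
    lo, hi = 0, len(claimed) - 1  # both agree at lo, disagree at hi
    assert claimed[lo] == honest[lo] and claimed[hi] != honest[hi]
    rounds = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        rounds += 1
        if claimed[mid] == honest[mid]:
            lo = mid  # first half is fine, so the fault is in the second half
        else:
            hi = mid  # they already disagree here, so the fault is in the first half
    return lo, rounds


def l1_adjudicate(claimed: List[int], i: int) -> bool:
    """The L1 contract re-executes only step i. True means the claim is faulty."""
    return step(claimed[i]) != claimed[i + 1]
```

For a 1024-step batch with the fault introduced at state 700, `bisect_dispute` lands on step 699 after 10 rounds (log2 of 1024), and the L1 contract only ever executes that one step.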

Optimism 2.0 intends to have the same basic fault proving mechanism, except that their L2 emulator is Geth compiled to MIPS (another VM compilation target). They haven't deployed it yet (and I didn't check if there's something on testnet, idk), and it's making a lot of people question their right to call themselves a rollup. They probably will eventually.

The L2 EVM-emulator must be deterministic for two reasons:

Syncing an L2 node must lead to the same state originally committed

Fraud proving and arbitration. Remember that the validity of a fraud claim ultimately comes down to a single execution step of this emulator.

Posting data

When Alice sends ETH to Bob, she broadcasts to the network a blob of binary data that contains these fields: from (Alice's address), to (Bob's address), amount, signature (Alice's signature using her private key), and (optionally) a message like "thank you for fixing my sink" in the data field.

If Alice were sending USDC instead, she would put the address of the USDC contract in the ‘to’ field, with the ‘amount’ being zero because she's not transferring anything to the contract itself (a non-zero amount would mean Alice is sending ETH to the USDC contract itself, a common user mistake). In the ‘data’ field, she would put the name of the transfer function in the USDC contract along with Bob's address and the token amount: "transfer(to:Bob, amount)". Because function names can have different lengths, the function signature (its name and argument types) is hashed, and the first four bytes of the hash are used as a fingerprint to identify it.
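As an illustration of what ends up in the ‘data’ field, here is a minimal sketch that assembles the calldata for a plain ERC-20 `transfer(address,uint256)` call. The four-byte fingerprint `a9059cbb` is hard-coded because it comes from a Keccak-256 hash, which Python's standard library does not ship; Bob's address below is a made-up placeholder.

```python
# First 4 bytes of keccak256("transfer(address,uint256)"), hard-coded because
# the Python standard library has no Keccak-256 implementation.
TRANSFER_SELECTOR = bytes.fromhex("a9059cbb")


def encode_transfer(to_address: str, amount: int) -> bytes:
    """Build the 'data' field: selector + two 32-byte big-endian arguments."""
    hex_part = to_address[2:] if to_address.startswith("0x") else to_address
    addr = bytes.fromhex(hex_part)
    if len(addr) != 20:
        raise ValueError("an Ethereum address is exactly 20 bytes")
    return (TRANSFER_SELECTOR
            + addr.rjust(32, b"\x00")       # address, left-padded to 32 bytes
            + amount.to_bytes(32, "big"))   # uint256 amount


# Hypothetical recipient address, for illustration only.
BOB = "0x" + "bb" * 20
# 25 USDC expressed in its smallest unit (USDC uses 6 decimals).
calldata = encode_transfer(BOB, 25 * 10**6)
```

The result is 68 bytes: 4 for the fingerprint and 32 for each argument. The transaction's own ‘to’ field would hold the USDC contract address and its ‘amount’ would be zero.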

The rollup sequencer does something similar. She sends a transaction whereby the ‘to’ field is the address of the rollup contract. In the ‘data’ field, she specifies the fingerprint of the function (something like "updateState") to be called in that contract. As input to that function, she appends a blob of data: updateState(L2 data blob). This data is mostly just logged by the L1 contract; the EVM doesn't execute what's in the belly of this data. This data contains all the L2 transactions emulated on L2. This is where the scalability comes from: a batch of transactions is being enshrined on L1 without the EVM actually executing them.
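A back-of-the-envelope sketch of why this is cheaper, assuming the batch is posted as ordinary calldata priced at 16 gas per non-zero byte and 4 gas per zero byte (EIP-2028). The 12 bytes per compressed L2 transfer is a purely illustrative assumption, and the rollup contract's own execution and storage costs are ignored.

```python
TX_BASE_GAS = 21_000    # intrinsic cost of any L1 transaction
GAS_PER_NONZERO_BYTE = 16
GAS_PER_ZERO_BYTE = 4


def calldata_gas(data: bytes) -> int:
    """Gas charged by L1 for carrying `data` as transaction calldata."""
    zeros = data.count(0)
    return GAS_PER_ZERO_BYTE * zeros + GAS_PER_NONZERO_BYTE * (len(data) - zeros)


def l1_gas_per_l2_tx(batch: bytes, n_txs: int) -> float:
    """Amortized L1 gas per L2 transaction for one posted batch."""
    return (TX_BASE_GAS + calldata_gas(batch)) / n_txs


# Worst case for pricing: 1000 transfers of 12 bytes each, every byte non-zero.
batch = bytes([1]) * (12 * 1000)
amortized = l1_gas_per_l2_tx(batch, 1000)
```

Under these assumptions the amortized cost is 213 gas per L2 transfer, against 21,000 gas for a plain ETH transfer executed directly on L1.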

What is a rollup though

So something is a rollup if:

1. Data is fully available on L1

2. Anyone can sync L2 state (open source node)

3. State advancement contract on L1

4. User self-exit contract on L1

5. Opt-in upgrades

“1” is a prerequisite for 2-4

“2” is a prerequisite for 3-4

“3” is a prerequisite for 4

Discourse around rollups

The discrepancies between these ideal features and what is implemented in practice are fertile ground for concern trolls and engagement farmers. They would point to, say, the fact that the upgradability of the rollup L1 contracts is controlled by a multisig. Projects would then argue back and say that this is necessary in early stages in order to respond quickly to potential bugs, so this is a matter of responsible deployment. Of course some projects are taking the upgradability issue more seriously than others.

Another serious deviation from these ideal features is a closed-source prover (ZKRU) or a missing fault proving and arbitration (ORU) L1 contract. Projects would argue back and say something about security, protection of intellectual property in early deployments, or gradual deployment (“we want to work on onboarding and bootstrapping an ecosystem, while adding critical components gradually”).

It would be very irrational for a rollup project to invest this much capital into building this complex L1 & L2 machinery only to degrade itself to a sidechain. Control is an expensive and dangerous liability. So it is not a bad idea to sit out these arguments and give the various teams some time to test the waters and get their deployments completed and battle-tested. Importantly, one should not use an argument against a specific rollup implementation to dismiss the rollup design pattern itself.

In general, be suspicious of jargon-addled or highly-abstract polemics around rollups. While implementations are very complex, the design is really simple (here are ZK Rollups in brief, for example). And you can judge for yourself the extent to which something is (on its way to being) a rollup or not by examining the (1)-(5) criteria in the previous section.

Rollups and bridges

Some reduce rollups to the notion of a validation bridge, which I think misses a big part of what rollups are:

Clearly some assets can be native to the rollup, which makes the idea of ‘bridge’ confusing

L2 state updates can encapsulate infinitely many other varieties of logic, such as aggregated oracle updates or governance-controlled outcomes the result of which can trigger L1 actions.

Sovereign rollups

So there are three aspects to building a rollup: data, sequencing, and settlement and law enforcement.

Sovereign rollups outsource data availability to an L1 like Ethereum or a data-availability-specialized L1 like Celestia. But the sequencing and settlement are handled by the sovereign chain's validators who, say, run an off-the-shelf BFT consensus like Tendermint. So is it an L1 or a rollup?

Advocates for calling them rollups argue that as long as there's a light client implementation that users can run (as part of their wallets for example), then they can reject any malicious state update and fork off. And they would be able to continue extending the chain because all they need is the data, which is available independent of the malicious SR validators. Users could also use the data to exit bridged assets on other chains. For example, Alice's light node uses data from Celestia to create a Merkle proof that she owns bridged Dai on Ethereum, and she proceeds to exit it. This of course assumes that the SR built a forced-exit contract on other L1s that can consume such proofs.
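The Merkle proof Alice relies on can be sketched as follows. This is a generic binary SHA-256 tree for illustration only: real chains use their own tree layouts and hash functions, leaf and inner-node hashes are not domain-separated here, and the leaf contents are made up.

```python
import hashlib
from typing import List, Tuple


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _next_level(level: List[bytes]) -> List[bytes]:
    """Hash adjacent pairs; `level` must have even length."""
    return [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: List[bytes]) -> bytes:
    """Root of a binary Merkle tree over a non-empty list of leaves."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = _next_level(level)
    return level[0]


def merkle_proof(leaves: List[bytes], index: int) -> List[Tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged 'sibling is on the left'."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = _next_level(level)
        index //= 2
    return proof


def verify(leaf: bytes, proof: List[Tuple[bytes, bool]], root: bytes) -> bool:
    """What a forced-exit contract would check: does `leaf` hash up to `root`?"""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root
```

The verifier only needs the root and a logarithmic number of sibling hashes, which is what makes it plausible to check such a claim inside an L1 contract.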

There is a lot of nuance:

The overall security here is that of min(DA, settlement). Assuming the steel-man case where the SR implemented a bridge on other L1s with self-exit machinery, the security around those assets (say WETH on Ethereum) is not that of Ethereum, it's that of the DA layer.

What if the DA is Bitcoin, does that make the SR even more secure than Ethereum-tethered rollups?

For bridged Bitcoin itself, this is unlikely, because it is fairly impossible to code-up self-exit contracts on Bitcoin.

For bridged assets from other chains, say from Ethereum, the overall security is min(Bitcoin, Ethereum) assuming self-exit contracts are coded up on Ethereum (which in this case would consume data roots from Bitcoin).

For SR-native assets, no, Bitcoin cannot compel the SR validators to be non-malicious. Assuming an SR light client exists, users would have to fork off using data from the Bitcoin blockchain. Compare this to Ethereum-tethered rollups where it's the rollup sequencer that would have to fork off. In other words, with SRs everyone can follow their truth, while with canonical rollups everyone is compelled to follow a single truth.

This is not to say SRs are not amazing. They have the freedom to innovate and create or adapt other runtimes without worrying about EVM-compatibility. They can also reduce DA costs by posting to cheaper and/or DA-specific L1s (although Ethereum is en route to becoming a cheap DA layer as well). Ideally the focus should be on highlighting these advantageous properties, as opposed to trying to equate an SR with Ethereum-tethered rollups (again, in their final form adhering to (1)-(5) above).

>_ ENDGAME INITIATE: The Dawn of ZK-everything

We have known how to compute "something" in ZK since the 80s, like proving knowledge of the private key that corresponds to a public key. And we have in-principle known how to prove more general computations in ZK since the 90s, like proving we know the prime factors of a number, or the optimal backpacking path in Europe that visits every city of interest. We have known -in theory- since the 90s how to zk-prove almost any computation that a modern laptop is capable of computing. There is basically no limit to what can in-principle be computed in ZK -though we may require multiple quantumly-entangled provers if we are doing a super hard computation -nothing we need to worry about for 99.9% of practical uses.

But more improvements were needed in terms of the size of proofs and the time it takes to verify them in order for any of this to become practical. The machinery behind the proof systems of the 90s and early 2000s, under the umbrella of the PCP line of research, was too complicated and/or too expensive to prove and/or verify.

Computing in zk proceeds in 3 main phases:

Reduce: programmers express their intents in a human-readable language, and the compiler reduces it to a lower-level equivalent representation called assembly, which is then further reduced to a machine instruction set, which is finally and concretely reduced to billions of transistor flickers. You are familiar with this notion because you do know there is no actual cat inside the computer when you’re watching a YouTube cat video.

In zero-knowledge, we introduce a special intermediate representation in this pipeline: a system of polynomials. The polynomials evaluate to zero at certain points if and only if the original computation was done correctly against a mix of public and private inputs. This notion of reduction is very instrumental in understanding theoretical boundaries of the (im)possibility and tractability of not only computation, but mathematical truths generally.

Prove ("compress"): we take the polynomials and apply mathematical operations that allow us to assert facts about them without actually evaluating them. If we are asserting facts about the polynomials, then we are asserting facts about the original equivalent computation that we reduced from. It is at this step where a lot of the complicated zkSNARK machinery is applied to, e.g., bind the prover to this exact system of polynomials, probe the polynomials -indirectly- to assert they evaluate to y at some point x, etc.

Verify: the compression produces two main artifacts: (a) the input (private "witness" and public inputs) that the computation was executed against, and (b) a proof, which is typically bytes to kilobytes of data. With these two, we can assert facts about the compression step, and therefore indirectly about the original higher-level computation we reduced from, without running the entire computation (or evaluating its equivalent system of polynomials at all the points -think (simplifying): 1 point evaluation per step of computation). A typical operation involved in the verification stage is MSM (multi-scalar multiplication).
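One reason a verifier does not need to evaluate the polynomials "at all the points" is that two different low-degree polynomials can agree on only a few points of a large field, so a single random probe already catches a false claim with overwhelming probability. The sketch below shows only that probing idea, over a toy prime field, with no commitments and no zero-knowledge; the field size and function names are illustrative assumptions.

```python
import secrets
from typing import List, Optional

P = 2**61 - 1  # a Mersenne prime used as a toy field; real systems use larger fields


def evaluate(coeffs: List[int], x: int) -> int:
    """Evaluate a polynomial (coefficients lowest degree first) at x, mod P."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def product_holds_at(a: List[int], b: List[int], c: List[int],
                     r: Optional[int] = None) -> bool:
    """Probe the claim a(x) * b(x) == c(x) at one point r (random if omitted)."""
    if r is None:
        r = secrets.randbelow(P)
    return evaluate(a, r) * evaluate(b, r) % P == evaluate(c, r)


# Claim: (x + 1)(x + 2) == x^2 + 3x + 2
a, b = [1, 1], [2, 1]
good_c = [2, 3, 1]
bad_c = [2, 3, 2]  # wrong leading coefficient
```

A true identity passes at every probe. The false claim above differs from the truth by the polynomial -x^2, so it can only pass if the probe happens to be 0, a chance of about 2^-61 in this toy field.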

So up until ~2010, the size of the data (witness) and/or verification time were too impractical to make the whole thing worth it -might as well run the original computation to verify.

The Cambrian explosion of ZK runtimes

In the early 2010s, this hurdle started getting overcome in successive improvements to all three phases above. In the reduction phase, a new intermediate representation (R1CS) was introduced. It was general enough to reduce onto from a more familiar representation, arithmetic circuits, which themselves are straightforwardly reducible onto from an even more familiar representation (Boolean SAT).

The reduction phase produces polynomials that are "woven out" of the R1CS system of vectors. The circom compiler is a practical implementation of this series of reductions from arithmetic circuits -> R1CS -> Polynomials (QAPs). What is guaranteed after the reduction procedure is this: the polynomials evaluate to zero on inputs X if and only if the system of R1CS vectors is satisfied if and only if the arithmetic circuits were evaluated correctly on that mix of public and private input.

In the proving and verification phases, the breakthrough was harnessing pairings to compress both the witness size and the verification time. These improvements culminated in the landmark Groth16 proving system (described more succinctly here). Zcash was the first to take advantage of this by creating a Groth16-based app-specific circuit (coin-transfer in ZK).
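To give a feel for what "the system of R1CS vectors is satisfied" means, here is a minimal sketch for the toy computation x^3 + x + 5 = out, flattened by hand into four multiplication constraints. It is not circom's output: the flattening, the variable order and the small field are all assumptions chosen for readability.

```python
from typing import List

P = 2**61 - 1  # toy prime field; production systems use a much larger one

# Witness layout (hypothetical order): [one, x, out, sym1, y, sym2]
#   sym1 = x * x,  y = sym1 * x,  sym2 = y + x,  out = sym2 + 5
A = [[0, 1, 0, 0, 0, 0],
     [0, 0, 0, 1, 0, 0],
     [0, 1, 0, 0, 1, 0],
     [5, 0, 0, 0, 0, 1]]
B = [[0, 1, 0, 0, 0, 0],
     [0, 1, 0, 0, 0, 0],
     [1, 0, 0, 0, 0, 0],
     [1, 0, 0, 0, 0, 0]]
C = [[0, 0, 0, 1, 0, 0],
     [0, 0, 0, 0, 1, 0],
     [0, 0, 0, 0, 0, 1],
     [0, 0, 1, 0, 0, 0]]


def dot(row: List[int], w: List[int]) -> int:
    return sum(r * v for r, v in zip(row, w)) % P


def witness(x: int) -> List[int]:
    """Run the computation and record every intermediate value."""
    sym1 = x * x % P
    y = sym1 * x % P
    sym2 = (y + x) % P
    out = (sym2 + 5) % P
    return [1, x, out, sym1, y, sym2]


def is_satisfied(w: List[int]) -> bool:
    """Each constraint i must satisfy (A_i . w) * (B_i . w) == (C_i . w)."""
    return all(dot(a, w) * dot(b, w) % P == dot(c, w)
               for a, b, c in zip(A, B, C))
```

With x = 3 the witness is [1, 3, 35, 9, 27, 30] and all four constraints hold; changing the claimed output to anything other than 35 breaks the last constraint. The QAP step would then interpolate these matrix columns into polynomials, which this sketch does not do.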

The ecosystem has been leaping forward at an accelerated pace since 2019, and absolutely no one expected this innovation to happen as fast as it did. The wide variety of proof systems that came after Groth16 continue to innovate in all three phases. For example, Plonk allowed for a more flexible (custom gates) intermediate representation that resides between the human-readable computation and the series of polynomials. This was a big improvement over the rather rigid intermediate representation in Groth16 (2-fan-in R1CS). Its flexibility has a deeper theoretical implication as well: it is Turing-complete, while Groth16's R1CS is NP-Complete. This is not a big deal or a game changer for the vast majority of blockchain-related computations. After all, no one is trying to perform protein-folding on-chain.

But Plonk also eliminated the need for a per-app trusted set-up that Groth16 requires, which is a massive implementation and deployment undertaking.

The Halo2 proving system was built on a variant of Plonk, and it is the basis for a couple of zkEVM projects like Scroll and Taiko -both of which build upon the zkEVM from the EF’s PSE group. Other Plonkish instantiations include Polygon’s zkEVM, whose team created their own layer of abstraction. They are also working on Miden, a STARK-based VM. If the polynomial commitment scheme in a Plonkish implementation uses FRI, then it becomes sort of a STARK. So the boundaries between these proving systems can be blurred. Although zkSync is also Plonk-ish, they “capture the EVM” at a higher level of the stack, taking the output of Solidity/Vyper compilers and reducing it to an LLVM intermediate representation. This allows them to take advantage of optimizations in LLVM compiler toolchains, but also comes at the expense of some incompatibilities with the EVM.

An interesting new reduction approach is that of RISC-0, where they do the necessary zk reductions to the well-known RISC-V instruction set. This cleanly separates what’s above, namely code in any programming language that can be compiled to RISC-V, from modifications or upgrades to the entire zkSNARKing machinery involved. It also works the other way around: if a new opcode is added to the EVM tomorrow, RISC-0 wouldn’t have to update its zk reduction machinery because the new, say rust-based EVM client, can just be re-compiled to RISC-V. Others are working on a similar approach for zk-WASM [0] [1] [2] which can also be a compilation target of many languages. This approach of pushing the reduction phase as far down the stack as possible is a very likely winner.

Similar to how innovations and simplifications of hardware instruction sets in the ~80s led to a cambrian explosion at higher levels (personal computing, ERPs for the enterprise, ...), the ever maturing "ZK instruction sets" or "zk-provable compilation targets" are going to transform blockchains on various fronts: scalability, privacy, and programmability.

A lot more activity is happening [1] [2] [3] in general-compute ZK and other corners. We will look back at this time as one of the most exciting R&D periods in tech history. The above overview leaves out a lot of details and important developments that have been happening in recent years. But you get the idea.

The impending revolution: ZK SDKs

One of the first decisions a software developer makes is which libraries to import. The most commonly needed utilities are typically shipped in the standard library of the programming language, like (de)serializing data or networking stuff for example. The very thing that the developer is building may be intended to be used as a (collection of) libraries itself, “here is my amazing SDK to help you build other amazing SDKs”, she might say.

Incredible ZK SDKs are now emerging [1] [2] to give dApp developers wings. Imagine: zk-abstracting away on-chain access and cross-chain messaging using storage proofs. This is going to change the world and 99% of the people won't know why -which is good!

It makes sense that these SDKs are only emerging now. The dust from the cambrian explosion of proof systems (previous section) had to settle a bit before they could be built. Indeed, these libraries harness proof systems to provide custom ad-hoc proving and verification utilities to complement the overall business logic of a dApp.

Currently, Ethereum contracts can access the state (the balance of Alice is X, or the interest rate of USDC lending on X is Y), but not history (the balance of Alice on day X is Y). But even if they could, it would still be expensive to digest this history to compute any sort of useful business logic.

ZK fixes this. Through these SDKs, we can create succinct proofs attesting to some piece of Ethereum history, such as:

This address has interacted with DeFi protocols X times over

The 50-day exponential moving average of ETH/USDC is X

The off-chain trading simulation algorithm X has been run correctly and it's recommending "Buy".

The median ETH/USDC in the top 5 CEXes is X (assuming each CEX has an official public key they sign tickers with).

There have been X slashes of Ethereum validators over period Y.

ZK SDKs will one day permeate the enterprise as well, disinfecting many corners infested with inefficiencies and with regulatory and business overhead. Imagine: an on-chain market for matching providers of (soon homomorphically encrypted) data and machine learning or business intelligence (BI) providers. This will require the enterprise to plug into open neutral blockchain platforms, because proofs need a root of truth to be meaningful.

As more and more value, data, users, and businesses join Ethereum, it will become increasingly "self-contained" as dependencies on (semi)trusted third parties (to provide data or take on-chain actions -governance etc) become unnecessary. It's as if the infinite garden of Ethereum is becoming infinitely fertile (soil can be (re)planted indefinitely) and infinitely sustainable (we don't need to bring water from the off-chain desalination plant -we have zk-wells to irrigate on demand).

“ZK is how Ethereum became sentient!” -future historian

Thanks for reading and have a great day.

Can the issues mentioned in the figure of Ethereum killers be addressed by ZK? I mean, they are not necessarily zombie chains if they continue to scale.